Example LLaVa Multi-Modal Model Deployment with GPU

In this example, we'll deploy the LLaVa multi-modal vision and language model to a REST endpoint.

Setup

We recommend using a Google Colab notebook with High-RAM enabled and a T4 GPU for this example. Because A100s on Colab aren't always available, we'll start with a version of the model that fits on a T4. Scroll down to Deploying a larger version for a guide to deploying the non-quantized model.

First, install the accelerate, bitsandbytes, transformers, Pillow, and modelbit packages:

```
!pip install accelerate bitsandbytes transformers Pillow modelbit
```

Import your dependencies:

```python
from transformers import AutoProcessor, LlavaForConditionalGeneration
from huggingface_hub import snapshot_download
from PIL import Image
import requests
import bitsandbytes
import accelerate
import modelbit
```

Then log in to Modelbit:

```python
mb = modelbit.login()
```

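Finally, download the LLaVa weights from Hugging Face:

```python
snapshot_download(repo_id="llava-hf/llava-1.5-7b-hf", local_dir="/content/llava-hf")
```

Colab's working directory is /content, so the later references to ./llava-hf and llava-hf point at this same folder.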

Building the model and performing an inference

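First, we'll load the model and its processor inside a modelbit.setup block:

```python
with modelbit.setup(name="load_model"):
    # Load the downloaded weights, quantized to 8 bits so they fit on a T4
    model = LlavaForConditionalGeneration.from_pretrained("./llava-hf",
                                                          local_files_only=True,
                                                          load_in_8bit=True)
    # The processor (tokenizer + image preprocessor) has no weights to quantize
    processor = AutoProcessor.from_pretrained("./llava-hf", local_files_only=True)
```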

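Note the load_in_8bit=True argument to the model: it quantizes the model's weights to 8 bits, roughly halving their memory footprint compared with 16-bit weights, so the model fits in the T4's 16 GB of VRAM.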
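To see why quantization matters here, a rough weights-only estimate helps. This sketch assumes about 7 billion parameters (the "7b" in llava-1.5-7b-hf) and ignores activations, the KV cache, and CUDA overhead. With load_in_8bit=True some layers also stay in 16-bit, so the real footprint sits somewhat above the int8 figure. weight_memory_gb is a hypothetical helper for illustration.

```python
# Rough, weights-only memory estimate for a model of a given size.
# Assumption: ~7e9 parameters for llava-1.5-7b-hf; activations, KV cache,
# and CUDA overhead are NOT included.

BYTES_PER_PARAM = {
    "float32": 4,
    "float16": 2,
    "int8": 1,  # the precision load_in_8bit=True targets for quantized layers
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the approximate size of the weights in gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Print the estimate at each precision for a ~7B-parameter model
for dtype in BYTES_PER_PARAM:
    print(f"{dtype:>8}: ~{weight_memory_gb(7e9, dtype):.0f} GB")
```

At 16-bit precision the weights alone (about 14 GB) would leave little of a T4's 16 GB for anything else, while 8-bit weights (about 7 GB) leave comfortable headroom.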

Next we'll write our function that prompts the model and returns the result:

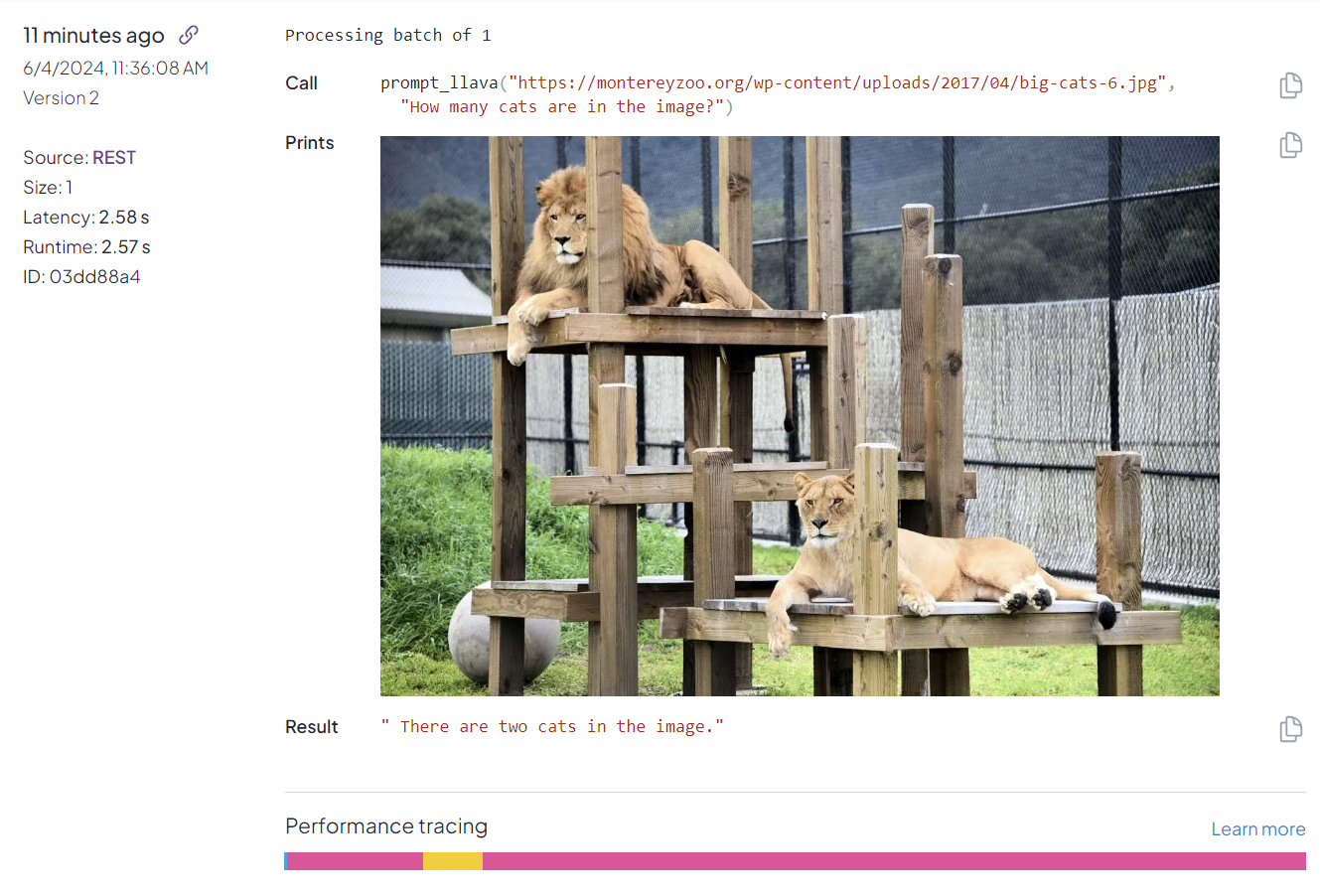

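```python
def prompt_llava(url: str, prompt: str):
    # Download the image from the URL and open it with Pillow
    image = Image.open(requests.get(url, stream=True).raw)
    mb.log_image(image)  # Log the input image in Modelbit

    # LLaVA 1.5 chat template; <image> marks where the image goes in the prompt
    full_prompt = f"USER: <image>\n{prompt} ASSISTANT:"
    inputs = processor(text=full_prompt, images=image, return_tensors="pt").to("cuda")

    # max_new_tokens caps the length of the answer; raise it for longer replies
    generate_ids = model.generate(**inputs, max_new_tokens=15)

    # The decoded text includes the prompt, so keep only what follows "ASSISTANT:"
    response = processor.batch_decode(generate_ids,
                                      skip_special_tokens=True,
                                      clean_up_tokenization_spaces=False)[0].split("ASSISTANT:")[1]
    return response
```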

This function downloads the image at url, prompts LLaVa with that image and the text prompt, and returns only the model's reply (the text after ASSISTANT:).
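The string handling in prompt_llava can be checked without a GPU. The sketch below pulls it into two hypothetical helpers, build_llava_prompt and extract_response (illustrative names, not part of transformers or Modelbit), using the same template as above. The decoded string in the example is made up, not real model output.

```python
def build_llava_prompt(prompt: str) -> str:
    """Wrap a user question in the same chat template prompt_llava uses."""
    return f"USER: <image>\n{prompt} ASSISTANT:"


def extract_response(decoded: str) -> str:
    """Return the text after the first 'ASSISTANT:' marker, stripped of whitespace."""
    _, sep, answer = decoded.partition("ASSISTANT:")
    if not sep:
        raise ValueError("decoded text does not contain 'ASSISTANT:'")
    return answer.strip()


# Made-up decoded text standing in for processor.batch_decode(...)[0]
decoded = build_llava_prompt("What animals are in this picture?") + " A cat."
print(extract_response(decoded))  # A cat.
```

Unlike .split("ASSISTANT:")[1], str.partition keeps everything after the first marker and the helper raises a clear ValueError, rather than an IndexError, when the marker is missing; it also strips surrounding whitespace from the reply.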

Test your model by calling prompt_llava:

```python
prompt_llava("https://doc.modelbit.com/img/cat.jpg", "What animals are in this picture?")
```

Deployment

From here, deployment to a REST API is just one line of code:

```python
mb.deploy(prompt_llava,
          extra_files=["llava-hf"],
          python_packages=["bitsandbytes==0.43.1", "accelerate==0.32.1"],
          setup="load_model",
          require_gpu=True)
```

We use the extra_files parameter to bring along the weight files in the llava-hf directory.

We also pin bitsandbytes and accelerate in python_packages, because transformers needs them to load the model in 8-bit but does not declare them as dependencies.

Finally, of course, this model requires a GPU, so we set require_gpu=True.
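Since extra_files bundles the llava-hf directory with the deployment, it can be worth checking how much data is in it before deploying. A minimal pathlib sketch; dir_size_gb is a hypothetical helper, not a Modelbit function.

```python
from pathlib import Path


def dir_size_gb(path: str) -> float:
    """Return the total size, in GB (1e9 bytes), of all regular files under `path`."""
    root = Path(path)
    if not root.is_dir():
        raise FileNotFoundError(f"{path!r} is not a directory")
    # rglob("*") walks the tree recursively, including hidden files and folders
    total_bytes = sum(p.stat().st_size for p in root.rglob("*") if p.is_file())
    return total_bytes / 1e9
```

For example, calling dir_size_gb("llava-hf") in the notebook just before mb.deploy reports the directory's size in gigabytes.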

Using a base64-encoded image instead of an image URL

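It may be more convenient or more performant to embed the image itself in the REST request instead of passing an image URL: for example, when the image isn't available at a public URL, or to spare the server a separate download.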

In that case, we'll add a couple more imports to our code:

```python
import base64
from io import BytesIO
```

We'll rename the first parameter of our inference function to image_b64, to make clear that it expects a base64-encoded image, and change the first line of the function body to decode the image directly from that value instead of downloading it from a URL.

Here's the entire updated inference function:

```python
def prompt_llava(image_b64: str, prompt: str):
    # Decode the base64 string back into image bytes and open them with Pillow
    image = Image.open(BytesIO(base64.b64decode(image_b64)))
    mb.log_image(image)

    full_prompt = f"USER: <image>\n{prompt} ASSISTANT:"
    inputs = processor(text=full_prompt, images=image, return_tensors="pt").to("cuda")
    generate_ids = model.generate(**inputs, max_new_tokens=15)
    response = processor.batch_decode(generate_ids,
                                      skip_special_tokens=True,
                                      clean_up_tokenization_spaces=False)[0].split("ASSISTANT:")[1]
    return response
```

Note that the model and processor from before are unchanged.

If we have that same cat.jpg file locally, we can test our function like so:

```python
def b64_image(path: str) -> str:
    with open(path, "rb") as f:
        return base64.b64encode(f.read()).decode()

response = prompt_llava(b64_image("cat.jpg"), "How many cats are in the image?")
print(response)
```
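Embedding the image does make each request bigger: base64 encodes every 3 bytes as 4 ASCII characters, so the payload is about a third larger than the raw file. A small stdlib check of that overhead, using random bytes as a stand-in for a real image; b64_overhead is a hypothetical helper.

```python
import base64
import os


def b64_overhead(raw: bytes) -> float:
    """Return len(base64(raw)) / len(raw); approaches 4/3 as the input grows."""
    if not raw:
        raise ValueError("raw must be non-empty")
    encoded = base64.b64encode(raw)
    # Decoding must give back exactly the original bytes; prompt_llava relies on this.
    if base64.b64decode(encoded) != raw:
        raise RuntimeError("base64 round trip failed")
    return len(encoded) / len(raw)


sample = os.urandom(300_000)  # random stand-in for ~300 KB of JPEG bytes
print(f"{b64_overhead(sample):.3f}")  # 1.333
```

Whether that extra third costs more than having the server fetch the image from a URL depends on image size and network conditions.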

Finally, the code to deploy the model is the same:

```python
mb.deploy(prompt_llava,
          extra_files=["llava-hf"],
          python_packages=["bitsandbytes==0.43.1", "accelerate==0.32.1"],
          setup="load_model",
          require_gpu=True)
```

And to call the model using Modelbit's Python API, the code would be:

```python
response = mb.get_inference(deployment="prompt_llava",
                            workspace="<Your Workspace Name>",
                            data=[b64_image("cat.jpg"), "How many cats are in the image?"])
print(response)
```
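The deployment can also be called over plain HTTPS without the modelbit package. The stdlib-only sketch below builds (but does not send) the POST request. It assumes the endpoint URL has the form https://<workspace>.app.modelbit.com/v1/<deployment>/latest and that the JSON body wraps the same argument list under a "data" key; both are assumptions here, so copy the exact URL and payload format from your deployment's API page in Modelbit. build_inference_request and "my-workspace" are hypothetical.

```python
import json
import urllib.request


def build_inference_request(workspace: str, deployment: str,
                            image_b64: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for the prompt_llava deployment.

    ASSUMPTION: URL pattern and {"data": [...]} body; verify against Modelbit.
    """
    url = f"https://{workspace}.app.modelbit.com/v1/{deployment}/latest"
    body = json.dumps({"data": [image_b64, prompt]}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


req = build_inference_request("my-workspace", "prompt_llava", "aGVsbG8=", "How many cats?")
# Sending it needs network access and a live deployment:
# with urllib.request.urlopen(req, timeout=120) as resp:
#     print(json.load(resp))
```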

Deploying a larger version

If you can get an A100 from Colab, you can build a larger (non-quantized) version of the model and deploy that to Modelbit!

To do so, remove load_in_8bit=True wherever it appears in your from_pretrained calls. Quantized models are placed on the GPU automatically, but unquantized models load onto the CPU by default, so you'll also need to add .to("cuda") to the model construction. Here's the new setup definition:

```python
with modelbit.setup(name="load_model"):
    model = LlavaForConditionalGeneration.from_pretrained("./llava-hf", local_files_only=True).to("cuda")
    processor = AutoProcessor.from_pretrained("./llava-hf", local_files_only=True)
```

The inference function is unchanged. Finally, when deploying, make sure you specify a large enough GPU:

```python
mb.deploy(prompt_llava,
          extra_files=["llava-hf"],
          setup="load_model",
          require_gpu="A10G")
```

We no longer need to pin accelerate or bitsandbytes, since the model is no longer quantized.

You can now call your LLaVa model from its production REST endpoint!